Prompt Engineering for Enterprise AI

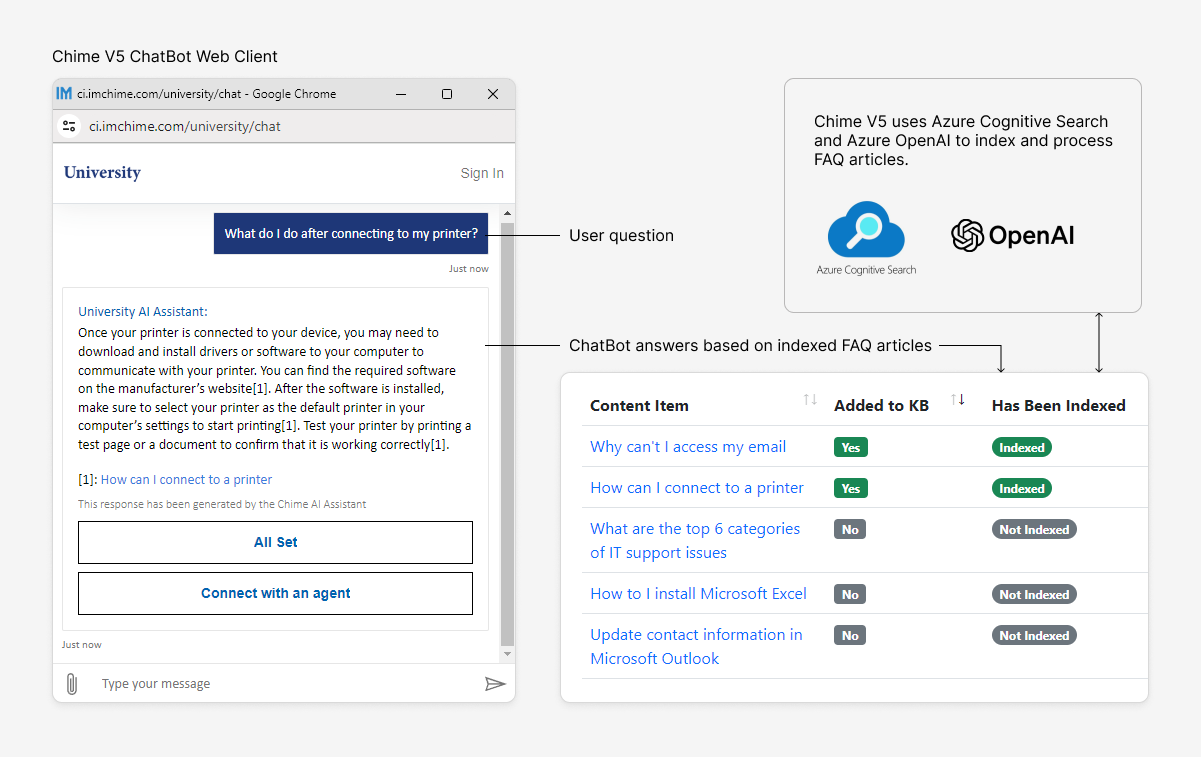

After building AI helpdesk assistants for a while, we’ve found that the prompt has a major effect on how well the AI answers user questions. Better prompts get the AI to provide more accurate and helpful responses to employees. This page shares the techniques that have worked well for us, so you can work your way up from basic prompts to more complex and impactful ones.

This page will cover:

Basic Prompting with goals and formatting

Prompts that Present Answers with Specific Formatting

Pass Microsoft Entra ID (Azure AD) values into the prompt to personalize the response - VIP escalation

Utilizing Additional Goals and Response Guidelines to Improve Prompts

Create prompts to ‘event back’ if the user seems frustrated or needs to be routed to a human

Use our AI Prompt Sandbox to create and refine valuable AI prompts

Getting Started: Basic Prompting

A basic prompt for an AI assistant should include a few things that will make it function better than just the base model:

Clear Context – Provide background information so AI understands the situation (e.g., "You are an IT helpdesk assistant for a large enterprise help desk"). The more context and background information you can give, the better.

Goals – Giving the AI a goal in the prompt helps it shape its responses to the user.

Specific Instructions – Tell the AI exactly what to do, whether it’s answering a question, summarizing, or troubleshooting. In the example prompt, we give it instructions on how to structure its responses with regard to sentence structure, grammar, and so on.

Expected Output Format – Request responses in a structured way (e.g., bullet points, step-by-step guides, or FAQs).
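As a minimal sketch of how the four elements above might fit together, the Python snippet below assembles a basic prompt from separate parts. The helper name `build_basic_prompt`, the section labels, and the sample wording are illustrative assumptions, not a format the product requires.

```python
def build_basic_prompt(context, goals, instructions, output_format):
    """Assemble a basic prompt from its four parts (hypothetical helper)."""
    sections = [
        ("Context", [context]),
        ("Goals", goals),
        ("Instructions", instructions),
        ("Output format", [output_format]),
    ]
    lines = []
    for title, items in sections:
        lines.append(f"{title}:")
        lines.extend(f"- {item}" for item in items)
        lines.append("")  # blank line between sections
    return "\n".join(lines).strip()


# Sample wording only; replace with text that fits your own helpdesk.
prompt = build_basic_prompt(
    context="You are an IT helpdesk assistant for a large enterprise help desk.",
    goals=["Resolve the employee's question accurately and quickly."],
    instructions=[
        "Use short, grammatically complete sentences.",
        "Answer only IT-related questions.",
    ],
    output_format="Use numbered steps for troubleshooting and bullet points for lists.",
)
print(prompt)
```

Keeping the parts separate like this makes it easier to change one element, such as the output format, without rewriting the rest of the prompt.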

Updating to use Prompts that Present Answers with Specific Formatting

One way to get better answers is to give the prompt specific guidelines on the format of its responses. In the example below, we want our AI to draw on our high-quality training material from KnowledgeWave, so we provide it with rules and examples on how to do so. Some key things to consider adding to your own prompts from this example are:

Expected Results to Return – Use the prompt to tell the AI you want it to return a specific type of content in its responses (e.g., Training Videos, FAQ Articles, PDF Documents, PowerPoint Presentations).

Response Rules – Provide specific instructions on how the content of responses should be formatted. In this example, we indicate that URLs need to be complete and come from a certain domain.

Examples for Clarity – Give sample responses or formats to guide AI’s style and accuracy. This gives the AI a reference point on how to structure a response when it encounters a specific condition that you asked it to respond to. Typically when doing this, you can give it rules to follow, then give an example question and answer sequence that it can borrow from to inform the way it responds to live chats.

Reiterate Rules – While the AI usually implements the instructions you set for it correctly, sometimes you need to be more insistent to ensure that it never deviates from them. Repeating the rules at the end of the prompt can help keep responses consistent with the formatting you want. Using slightly different wording the second time you mention a rule can also help the AI better understand what you want from it.
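The rules, example, and closing reminder described above can be sketched as a small prompt builder. Everything named here is an assumption made for illustration: the helper `build_formatting_prompt`, the domain `training.example.com`, and the sample question and answer.

```python
def build_formatting_prompt(base_prompt, content_types, rules, example_q, example_a):
    """Append content types, rules, one example, and a closing reminder.

    Hypothetical helper: the layout is one possible arrangement, not a
    required format.
    """
    parts = [
        base_prompt,
        "Return these content types when relevant: " + ", ".join(content_types) + ".",
        "Response rules:",
        *[f"{n}. {rule}" for n, rule in enumerate(rules, start=1)],
        "Example:",
        f"User: {example_q}",
        f"Assistant: {example_a}",
        # The rules are repeated at the end so they are the last thing read.
        "Reminder, always follow these rules:",
        *[f"- {rule}" for rule in rules],
    ]
    return "\n".join(parts)


base = "You are an IT helpdesk assistant."
rules = [
    "Always give complete URLs that start with https://training.example.com/.",
    "Never shorten or invent a link.",
]
formatting_prompt = build_formatting_prompt(
    base_prompt=base,
    content_types=["Training Videos", "FAQ Articles", "PDF Documents"],
    rules=rules,
    example_q="How do I create an inbox rule?",
    example_a="This training video walks through it: https://training.example.com/videos/inbox-rules",
)
print(formatting_prompt)
```

This sketch repeats each rule word for word. If the AI still drifts, rewording the reminder copy slightly is worth trying.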

Adding in Employee Personalization to the Prompts

Prompts can be updated to make better use of what Microsoft Entra ID (Azure AD) tells the AI about the user looking for help. This could be as simple as letting the AI know the user’s first name so it can be more personable and friendly. Alternatively, you could let the AI see the user’s job title or office location so that it can provide information that is directly relevant to the user.

We pull user profile data from Microsoft Entra ID (Azure AD). This gives us access to name, jobTitle, officeLocation, country, department, employeeType, and various other properties. To see more of the available properties, check out the Microsoft Entra ID user profile documentation. In these examples, we use variable swap-out so the AI receives the metadata values we hold about the end user, such as:

${Guest.GivenName} ${Guest.JobTitle} ${Guest.OfficeLocation}
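The chat service performs this swap-out itself. The Python sketch below only illustrates the idea, assuming placeholders of the form `${Name.Property}`. The helper `fill_placeholders` and the sample profile values are hypothetical.

```python
import re

# Matches placeholders such as ${Guest.GivenName}.
PLACEHOLDER = re.compile(r"\$\{([A-Za-z_][A-Za-z0-9_.]*)\}")


def fill_placeholders(template, values):
    """Replace ${Key} placeholders with values; leave unknown keys untouched."""
    def replace(match):
        key = match.group(1)
        return str(values.get(key, match.group(0)))
    return PLACEHOLDER.sub(replace, template)


template = (
    "The user's first name is ${Guest.GivenName}. "
    "Their job title is ${Guest.JobTitle} and they work in ${Guest.OfficeLocation}."
)
# Sample values standing in for data pulled from the user profile.
profile = {
    "Guest.GivenName": "Maria",
    "Guest.JobTitle": "Sales Representative",
    "Guest.OfficeLocation": "Madrid",
}
filled = fill_placeholders(template, profile)
print(filled)
```

Leaving unknown placeholders untouched, rather than replacing them with an empty string, makes a missing profile value easy to spot when testing a prompt.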

User-Specific Information – The AI can contextualize responses rather than providing generic, one-size-fits-all answers. Personalization improves user engagement and builds trust by making the AI feel more responsive to individual needs. We are using this to try and make interactions feel less robotic and more conversational, and enhance engagement with the users.

Role-Based Language – We want the AI to adapt its tone, terminology, and level of detail based on the user’s job title. Responses that match the user’s technical proficiency and job- or industry-specific language are important. For example, a developer or IT admin asking about their ticketing system may need specific API documentation in a response, while a sales representative asking a similar question about ticketing would likely prefer an explanation with direct action steps and wouldn’t care much for the underlying structure.

Location Relevance – Knowing the user’s location lets the AI give more useful and accurate answers. If a user asks about the nearest IT support, the AI doesn’t give generic instructions but provides an answer based on their actual office location. Additionally, if they ask about regional compliance, the AI can try its best to reference location-specific content or branch-specific policies. If a US-based employee and a Spain-based employee have the same question, they will likely get better help if the AI can tailor its responses to each of them.

Feedback & Continuous Improvement – We want the model to be aware that it might not have the perfect answer and to ask for confirmation that it is answering questions correctly. If the user lets it know they need help in a different way, the AI will ask for more details rather than making assumptions again.

Utilizing Additional Goals and Response Guidelines to Improve Prompts

In this example prompt, we give the AI model additional goals and response guidelines that are more specific to your use case than those in the first example prompt. Here we focus on defining what information the AI has access to, how it should handle situations where it is not sure how to answer, and when to use technical language versus when to avoid it. Here are some points to consider when continuing to enhance your chat prompts:

Company-Specific Knowledge – Reference internal technologies, terminology, or resources to improve relevance. In this example we are trying to convey information to customers about the chat service and the systems we use, so including additional information in the prompt about services we would like the AI to know about is very helpful for guiding it into good responses.

Handling Unknowns – Direct AI on what to do if it lacks enough information (e.g., "If uncertain about the intent of a question, provide an answer to the most likely topic based on context."). We often find that if the AI model does not know the exact answer, it will spend time asking multiple clarifying questions to make sure it gives the perfect answer. However, if you have a good set of FAQs and documents in your tenant, it will often give a perfectly good answer even without full context.

Follow-Up Suggestions – Encourage AI to ask if its response was good, so the user has an opportunity to ask the question differently if the response was not quite what they wanted (e.g., "Was this information helpful? If not, please let me know so I can assist further.").

User-Friendly Language – Ensure AI responds in a way that’s easy for the user to understand, avoiding overly technical jargon unless the way the user asks indicates that they have the technical knowledge for a more detailed and technical response.

Proactiveness – Ask the AI model to be proactive and think of common follow-up questions the user might have. If you have specific examples of common questions your helpdesk receives, this would also be a great spot to include some example question and answer sequences like we did in a previous section.
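One way to picture these additions is as a numbered guideline block appended to an existing prompt. This is a minimal sketch: the helper `add_guidelines` is hypothetical, and the guideline wording is sample text built around the two quoted examples above.

```python
# Sample guideline text; the first two entries reuse the quoted examples above.
ADDITIONAL_GUIDELINES = [
    "If uncertain about the intent of a question, provide an answer to the "
    "most likely topic based on context.",
    "End each answer by asking: Was this information helpful? If not, please "
    "let me know so I can assist further.",
    "Avoid technical jargon unless the user's wording shows technical knowledge.",
    "Anticipate one or two common follow-up questions and address them briefly.",
]


def add_guidelines(base_prompt, guidelines):
    """Append a numbered 'Response guidelines' block to a prompt (hypothetical helper)."""
    numbered = [f"{n}. {text}" for n, text in enumerate(guidelines, start=1)]
    return base_prompt.rstrip() + "\n\nResponse guidelines:\n" + "\n".join(numbered)


enhanced = add_guidelines(
    "You are an IT helpdesk assistant for a large enterprise help desk.",
    ADDITIONAL_GUIDELINES,
)
print(enhanced)
```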

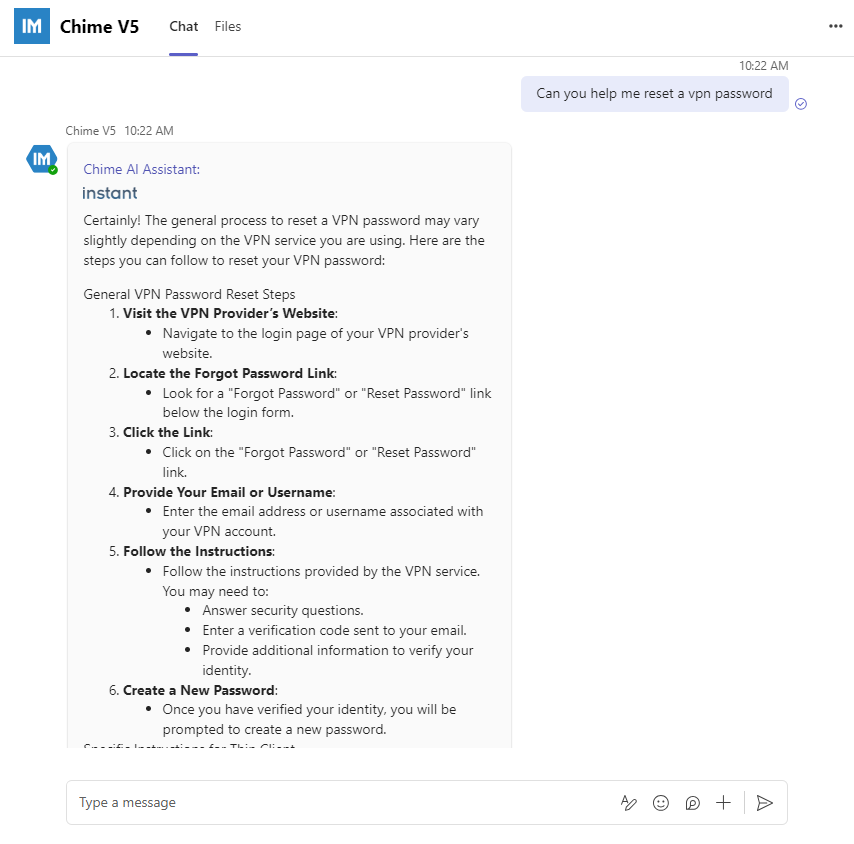

Creating Prompts that Provide Alternate Routing Options Based on Chat Interactions

When talking with the chatbot, the end user will likely indicate at some point that they are done talking with the AI. When that happens, we want to be ready to direct them to the next step in a conversational way. This could include offering options such as ending the chat, connecting with a live agent, or directing them to relevant resources based on their conversation history. By designing prompts that naturally guide users to the next step, we can enhance the user experience and ensure a smooth transition, whether they need further assistance or are ready to exit the interaction. We can also have the AI return messages that use slash commands such as /deflect, /end, or /agent. These slash commands allow the chat service to move the user to a different part of the chat workflow smoothly and conversationally, so the user never feels overwhelmed about what to do next.
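The chat service interprets these slash commands itself. The sketch below only illustrates the idea of a prompt instruction paired with a check for a command in the AI's reply. The wording of `ROUTING_INSTRUCTIONS`, the helper `extract_command`, and the meaning assigned to each command are assumptions. /deflect is left out of the sample instructions because its exact behavior is not described here.

```python
import re

# Sample prompt text (assumed wording) telling the AI when to emit a command.
ROUTING_INSTRUCTIONS = (
    "If the user seems frustrated or asks for a person, end your reply with /agent. "
    "If the user says they are finished, say goodbye and end your reply with /end."
)

# A command counts only as a standalone token, not as part of a URL or path.
COMMAND = re.compile(r"(?<!\S)/(deflect|end|agent)(?!\S)")


def extract_command(ai_message):
    """Return (message without the command, command or None) for the first match."""
    match = COMMAND.search(ai_message)
    if match is None:
        return ai_message.strip(), None
    text = (ai_message[:match.start()] + ai_message[match.end():]).strip()
    return text, match.group(0)


reply_text, command = extract_command("I'll connect you with a live agent now. /agent")
print(reply_text)
print(command)
```

Requiring whitespace on both sides of the command keeps a link such as https://example.com/end from being mistaken for a routing instruction.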

Use our AI Prompt Sandbox to Create and Refine Valuable AI Prompts

Refining an AI prompt can take some time and multiple iterations before you are happy with all of the responses it can provide. To assist with this, we have added a sandbox where you can test the prompt, make changes in real time, and see the new output. This has been crucial for testing and updating prompts efficiently. By allowing instant feedback and adjustments, the sandbox streamlines the refinement process and reduces trial-and-error frustration. It also lets you experiment with different phrasing, structures, and parameters to optimize accuracy and relevance.

To get started with this:

Navigate to the Manager Dashboard.

Under Chat Development click on the AI Prompt Library menu option.

If you have not added a prompt here yet, you can do so using the New AI Prompt Library Item button at the top right of the page. Then fill out the prompt and click Publish at the bottom of the page.

Click the Test Prompt button on the right side of the prompt you want to test out.

In the prompt playground, you can edit the prompt in the left-side menu, and any changes will take effect in the messages you receive in the chat area on the right.

Note: If you are using any links or metadata values in this playground, they may not display the same way as they would in a real chat, because the playground does not have access to that data. In the below example you can see that metadata variables are shown as ${Guest.GivenName}, but in a real chat that value will get swapped out with the first name of the Guest/End User.